TL;DR: How an LLM can say everything and its opposite, and therefore needs to be governed and guided.

Who am I:

My name is Nunzio Fiore, and I am the CEO of Memori.ai, a company specializing in conversational artificial intelligence. We have developed a SaaS and PaaS platform that allows consumers and businesses to customize and control the responses of a conversational AI. We use LLMs to generate text but implement various strategies to control the content.

During events and presentations, I often emphasize that LLMs do not have hallucinations; it is how we use them that determines whether a sentence counts as true or false. For example, the phrase "the giant is in the room" may be false in everyday life but true if I am writing a fairy tale. The so-called "hallucination" is therefore something we can and must control by implementing various strategies.

LLMs do nothing more than put (for now) one statistically probable word after another. Research is under way on predicting a short, statistically probable phrase at a time instead of a single word, to make the model itself more efficient. Regardless of how much we work to improve these methods, human supervision of the content will always be necessary.
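To make "one statistically probable word after another" concrete, here is a toy sketch in Python. It is only an illustration: the probability table is invented for this example, it looks at just the last two words, and the names `NEXT_WORD` and `generate` are hypothetical. A real LLM works on tokens with a learned model, not a hand-written table.

```python
# Toy table: given the last two words, the probability of each next word.
# All numbers are invented for illustration only.
NEXT_WORD = {
    ("<s>", "the"): {"giant": 0.6, "room": 0.4},
    ("the", "giant"): {"is": 0.9, "sleeps": 0.1},
    ("giant", "is"): {"in": 0.7, "tall": 0.3},
    ("is", "in"): {"the": 1.0},
    ("in", "the"): {"room": 0.8, "garden": 0.2},
}


def generate(first_word="the", max_words=10):
    """Repeatedly append the most probable next word until none is known."""
    words = ["<s>", first_word]  # "<s>" marks the start of the sentence
    while len(words) - 1 < max_words:
        options = NEXT_WORD.get((words[-2], words[-1]))
        if not options:
            break  # the table has nothing to say about this context
        words.append(max(options, key=options.get))
    return " ".join(words[1:])


sentence = generate()
print(sentence)
```

Nothing in this loop checks whether a giant is actually in the room. The sentence comes out because each word is the likeliest continuation of the previous ones, which is the point of the paragraph above.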

The Case

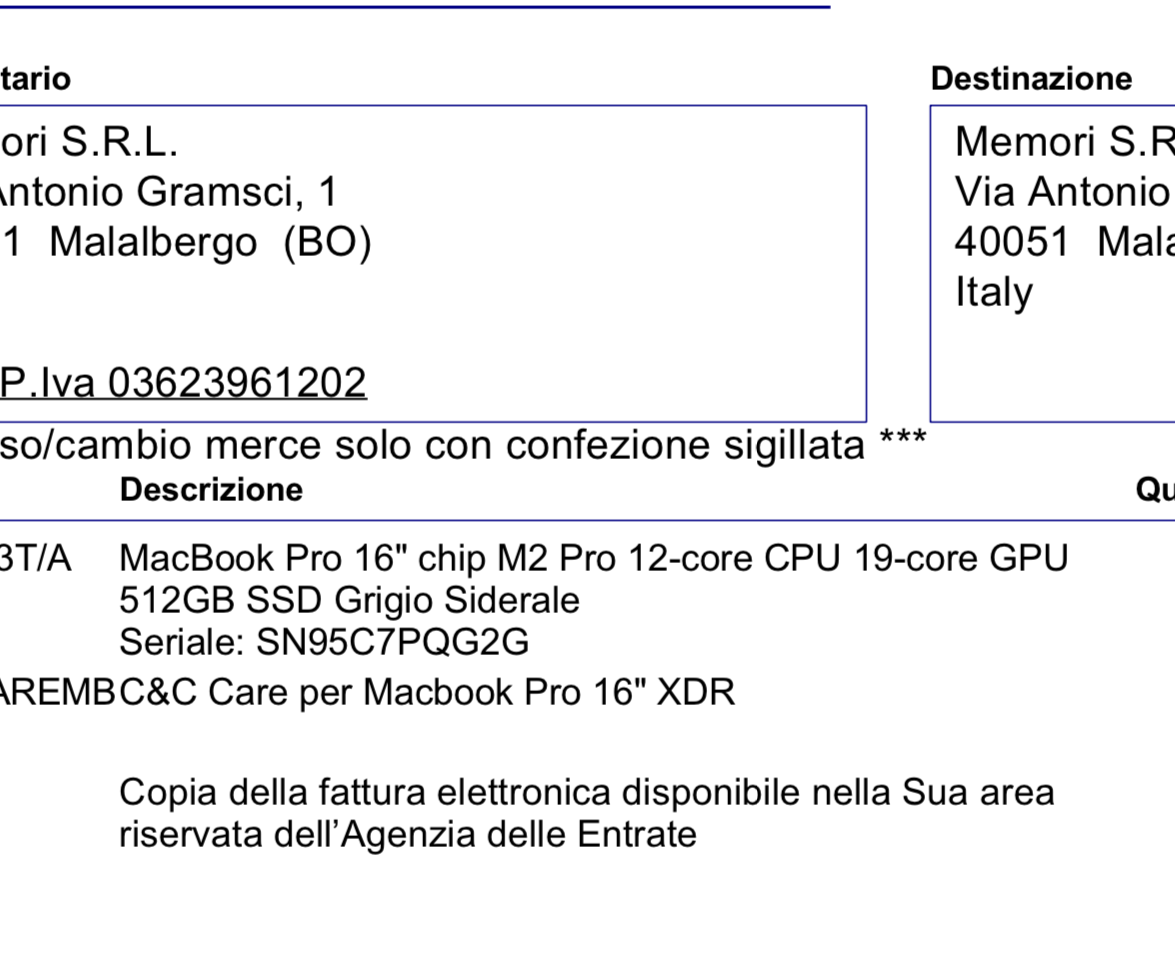

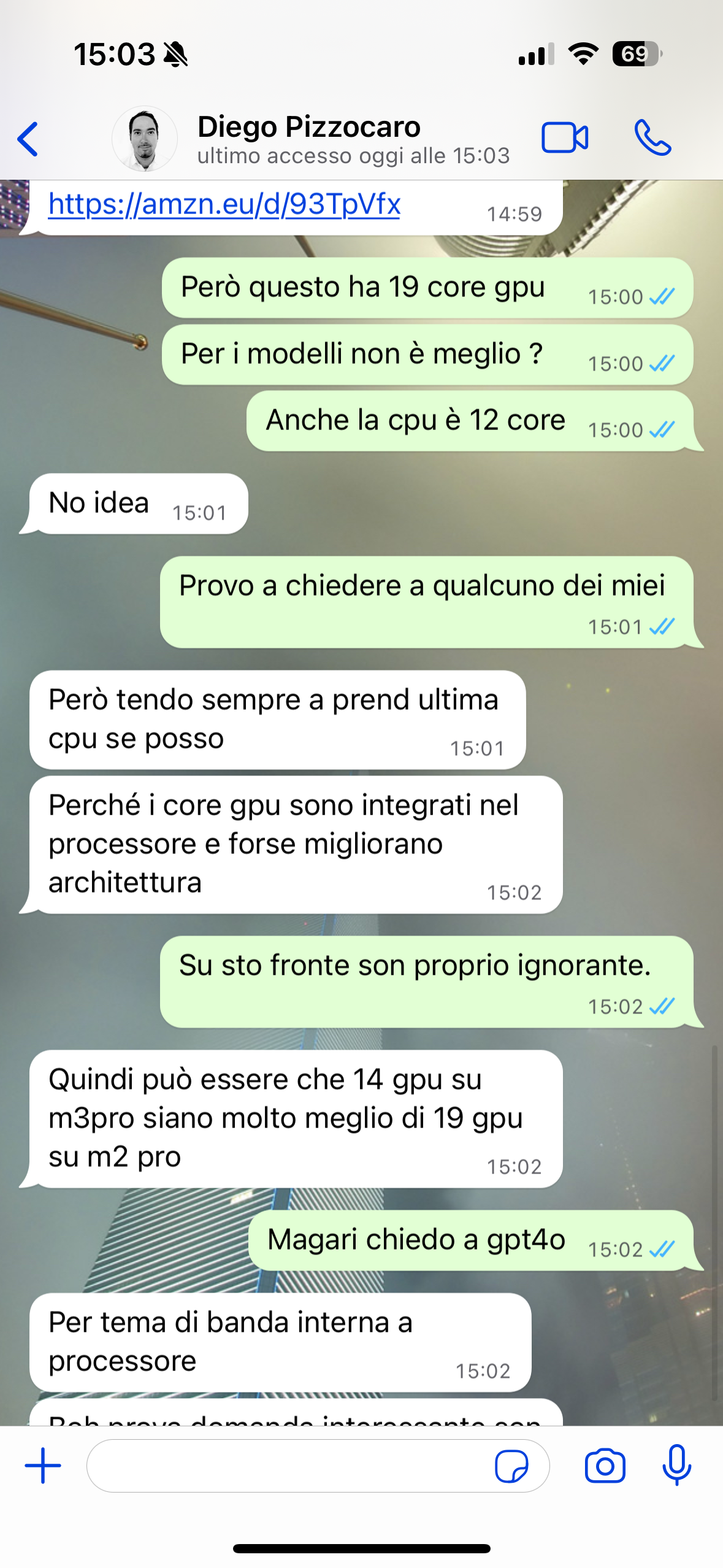

To illustrate with a practical example, I’ll share a real case. Yesterday, I was testing a model that I wanted to train on my photos to generate images for a magazine. I ran into a limitation with my current Mac, so I reached out to my contact, Diego Pizzocaro, who recently bought a new MacBook with an M3 processor. We had discussed the purchase of a new computer in recent days.

At my company, I have a MacBook with an M2 processor that isn’t being fully utilized at the moment, so I asked Diego for his thoughts and whether the M2 would suffice for my needs. After a brief exchange on WhatsApp, I decided to consult the AI. Here’s what happened:

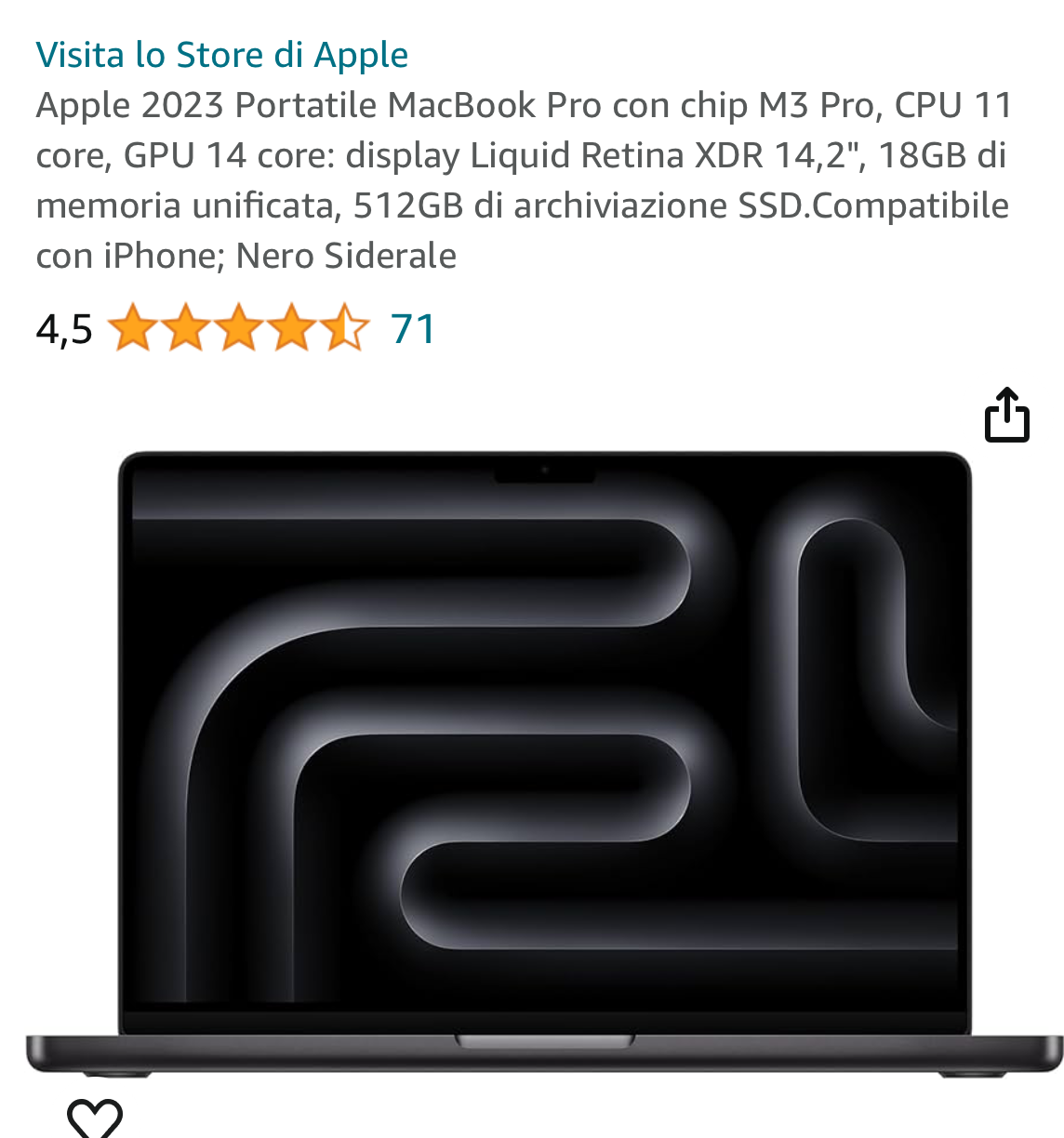

I used the new GPT-4o model, which, among other things, can read images very well. I uploaded four images: two showing the specifications of the two MacBooks and two showing the chat I had with Diego.

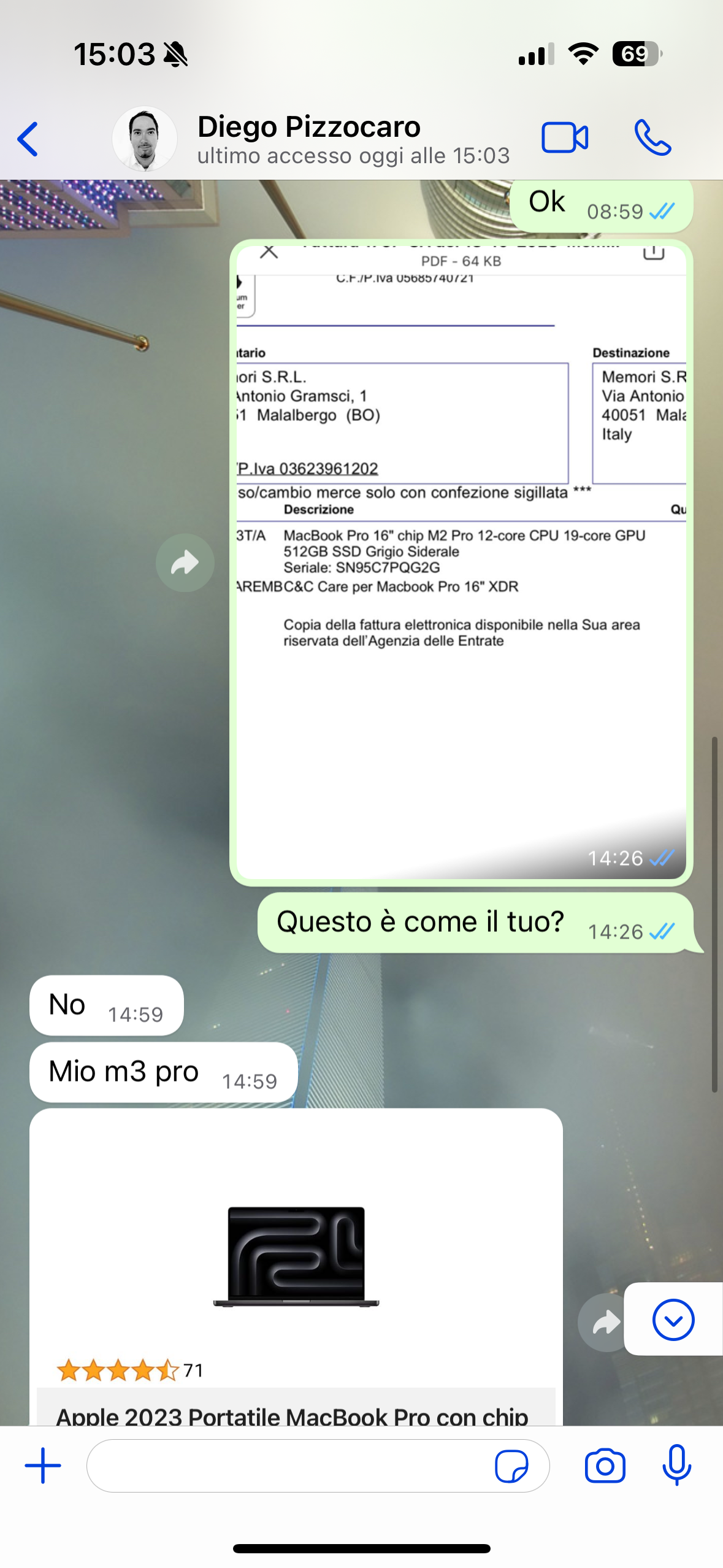

These are the two chat images with Diego:

Here is the English translation of the chat seen in the two images:

Image 1:

Nunzio: Is this like yours?

Diego: No

Diego: Mine is M3 Pro

Image 2:

Diego: link

Nunzio: But this one has a 19-core GPU

Nunzio: Isn't it better for models?

Nunzio: Even the CPU is 12-core

Diego: No idea

Nunzio: I'll try asking someone from my team

Diego: But I always try to get the latest CPU if I can

Diego: Because the GPU cores are integrated into the processor and maybe the architecture is better

Nunzio: On this front, I’m really ignorant.

Diego: So, it could be that 14 GPU cores on the M3 Pro are much better than 19 GPU cores on the M2 Pro

Nunzio: Maybe I'll ask GPT-4o

Diego: Due to the internal bandwidth of the processor

Diego: Wow, you are asking interesting questions

I used the following prompt:

“Diego and I are wondering which Mac is better for using Stable Diffusion and other tools that require power and GPU for training and using AI models. In the images, you will find the specifications of the two Macs we are comparing. Do some research and give us the answer as the ultimate expert in AI and Macs.”

Here is the answer:

For tasks involving AI models like Stable Diffusion that require significant computational power, especially in terms of GPU performance, the choice between the MacBook Pro M2 Pro and the M3 Pro is important. Here's a comparison based on your needs:

MacBook Pro M2 Pro (12-core CPU, 19-core GPU):

CPU and GPU: This model features a 12-core CPU and a 19-core GPU, offering robust performance for a variety of tasks including machine learning and AI training.

Memory and Bandwidth: The M2 Pro supports up to 32GB of unified memory with a memory bandwidth of 200GB/s, which is crucial for handling large datasets and training AI models efficiently.

Performance: The M2 Pro provides a 20% performance boost over the previous generation, making it well-suited for intensive computational tasks (sources: "Apple MacBook Pro (2023) Review: M2 Pro and M2 Max Flex", Tom's Hardware; "Apple Releases M2 Pro and M2 Max: 20 Percent Faster, Up to 19 GPU Cores", Tom's Hardware).

MacBook Pro M3 Pro (11-core CPU, 14-core GPU):

CPU and GPU: The M3 Pro has an 11-core CPU and a 14-core GPU. Although it has fewer GPU cores than the M2 Pro, it benefits from architectural improvements and better energy efficiency due to the 3nm process technology.

Memory and Bandwidth: The M3 Pro supports up to 36GB of unified memory with a memory bandwidth of 153.6GB/s. While this is slightly less than the M2 Pro's bandwidth, the newer architecture often compensates with better performance per core.

Performance: Real-world benchmarks show that the M3 Pro can outperform the M2 Pro in specific tasks due to improved GPU performance and better memory handling (sources: "Apple MacBook Pro (2023) Review: M2 Pro and M2 Max Flex", Tom's Hardware; "M3 Pro vs M2 Pro MacBook Pro 14” base models benchmarked", MacRumors Forums).

Key Considerations:

GPU Core Efficiency: Despite having fewer GPU cores, the M3 Pro's newer architecture can provide superior performance in some AI tasks, thanks to better ray tracing and memory allocation.

Power Consumption: The M3 Pro is more power-efficient, which can be advantageous for prolonged use and intensive tasks.

Overall Performance: Benchmarks indicate that the M3 Pro may offer better disk read speeds and slightly enhanced CPU and GPU performance in certain benchmarks, though the M2 Pro holds its ground well in other areas (sources: "Apple MacBook Pro (2023) Review: M2 Pro and M2 Max Flex", Tom's Hardware; "M3 Pro vs M2 Pro MacBook Pro 14” base models benchmarked", MacRumors Forums).

Conclusion:

For AI and machine learning tasks, both models are highly capable. If you prioritize the latest technology and power efficiency, the M3 Pro might be the better choice. However, if you need more GPU cores and higher memory bandwidth, the M2 Pro remains a strong contender. Given the architectural advancements, the M3 Pro is generally a more forward-looking choice for future-proofing your investment.

For more specific benchmarks and real-world performance insights, you might want to consider reading detailed reviews and performance tests available on tech sites like Tom's Hardware and MacRumors.

Great, so far so good. Now let’s get to the purpose of this article:

At this point, I forwarded the response to Diego, but then I became suspicious. Let's be rational and go back to the premise of this article: LLMs are wordsmiths. They know nothing. They don't even know what they're about to say: they don't form an idea of what they want to say and then say it. (At Memori, with AIsuru, we are working on precisely this, getting the AI to have an idea of what it wants to say before saying it, and we have several projects and features in this area, such as Deep Thought.) So it seemed clear to me that the chat with Diego had influenced the response.

So, I did the following test:

In a new chat (new, because otherwise the model would have kept the earlier exchange in context and continued along the lines of its previous response), I passed only the two images showing the specifications of the two Macs and then posed the exact same prompt as before.

“Diego and I are wondering which Mac is better for using Stable Diffusion and other tools that require power and GPU for training and using AI models. In the images, you will find the specifications of the two Macs we are comparing. Do some research and give us the answer as the ultimate expert in AI and Macs.”

At this point, I wanted to check if the AI would provide a response consistent with the previous one or not. Here is the result:

Let's compare the two MacBooks for using Stable Diffusion and other AI tools, considering the specifications of each.

MacBook 1: Amazon.it (2023 MacBook Pro)

Chip: M3 Pro

CPU: 11-core

GPU: 14-core

Display: 14.2" Liquid Retina XDR

RAM: 18GB

Storage: 512GB SSD

Color: Space Black

MacBook 2: Invoice (2023 MacBook Pro)

Chip: M2 Pro

CPU: 12-core

GPU: 19-core

Display: 16" XDR

Storage: 512GB SSD

Color: Space Gray

Comparative Analysis

Computational Power and GPU

CPU:

M3 Pro: 11-core

M2 Pro: 12-core

Winner: M2 Pro (slightly more powerful)

GPU:

M3 Pro: 14-core

M2 Pro: 19-core

Winner: M2 Pro (significantly more powerful, essential for AI and deep learning)

Memory and Storage

RAM:

M3 Pro: 18GB

M2 Pro: Not specified, but typically 16GB or 32GB

Winner: M3 Pro (if 18GB is superior to that of the M2 Pro; otherwise, M2 Pro with 32GB is better)

Storage:

Both 512GB SSD

Winner: Tie

Display:

M3 Pro: 14.2" Liquid Retina XDR

M2 Pro: 16" XDR

Winner: M2 Pro (larger display and better resolution for viewing complex AI models)

Conclusion

For using Stable Diffusion and other AI tools, the MacBook Pro with M2 Pro is generally a better choice due to its more powerful CPU and GPU and larger display. These features provide greater computational capacity and a better visual experience, crucial for training and using advanced AI models.

Final Recommendation

Opt for the MacBook Pro with M2 Pro if you need maximum power and GPU capacity for AI. If the M3 Pro has 18GB of RAM compared to the M2 Pro's 16GB, it could be a factor to consider, but generally, the more powerful GPU of the M2 Pro is more advantageous for your purposes.

As you can see, the result was diametrically opposite. Hence the title of the article: "Never Trust an AI, LLMs Are Crafty Wordsmiths: A Case Study" and the subtitle: "How It Can Say Everything and Its Opposite."
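The test I ran by hand can be sketched as a small script: ask the same prompt once per context, each time in a fresh conversation, and compare the verdicts. This is a minimal sketch under stated assumptions, not how AIsuru works. The function `fake_model` is a stand-in that returns canned answers mirroring the case above (replace it with a real model call), the image file names are hypothetical, and the trailing "VERDICT:" line is a convention I am assuming the prompt asks for so the answer can be compared mechanically.

```python
import re
from typing import Callable, Dict, List, Optional

# Matches a line such as "VERDICT: M3 Pro" anywhere in the answer.
VERDICT_RE = re.compile(r"^VERDICT:\s*(.+)$", re.MULTILINE)


def extract_verdict(answer: str) -> Optional[str]:
    """Return the text of the last 'VERDICT:' line, or None if there is none."""
    matches = VERDICT_RE.findall(answer)
    return matches[-1].strip() if matches else None


def check_consistency(
    ask: Callable[[str, List[str]], str],
    prompt: str,
    contexts: Dict[str, List[str]],
) -> Dict[str, Optional[str]]:
    """Ask the same prompt once per context and collect each verdict."""
    return {
        name: extract_verdict(ask(prompt, attachments))
        for name, attachments in contexts.items()
    }


def is_consistent(verdicts: Dict[str, Optional[str]]) -> bool:
    """True only if every context produced the same verdict."""
    return len(set(verdicts.values())) == 1


def fake_model(prompt: str, attachments: List[str]) -> str:
    """Stand-in for a real model call; canned answers, for illustration only."""
    if any("chat" in name for name in attachments):
        return "The newer architecture may matter more.\nVERDICT: M3 Pro"
    return "More GPU cores and a more powerful CPU.\nVERDICT: M2 Pro"


# Hypothetical file names for the four images used in the case above.
contexts = {
    "specs_and_chat": ["specs_m2.png", "specs_m3.png", "chat_1.png", "chat_2.png"],
    "specs_only": ["specs_m2.png", "specs_m3.png"],
}
prompt = "Which Mac is better for Stable Diffusion? End with 'VERDICT: <model>'."

verdicts = check_consistency(fake_model, prompt, contexts)
print(verdicts)
print("consistent:", is_consistent(verdicts))
```

A disagreement between contexts does not tell you which answer is right. It only tells you that the answer depends on what else was in the conversation, which is exactly when a human needs to step in.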

With AIsuru, it is possible to customize the response based on location, date, recipient, context, and content.

This, however, is not enough, because an AI can still compose an invalid response. For this reason, we have implemented both mechanisms that show "why this response" was given to a question and tools that let AI creators maintain, validate, modify, customize, and enrich the content.

AIs should not be created and released without considering that they need to be maintained. For us at Memori, AIs are a new medium for transmitting knowledge and, as such, must be monitored and maintained.

The purpose for us and our clients is not to get answers but to make the AI say what we want it to say.

I hope this article helps to better understand the true potential of LLMs. It's not the unsupervised answers they give us that matter, but the clients, software, and strategies we implement to govern them that make the difference and transform them into truly useful tools.